User:Alvonruff/Debugging Remarks

Remarks on Debugging the Current Code Base

There are a number of issues slowing down the porting effort, which makes the debugging process slow.

- SESSION Arguments. Since we've introduced the SESSION variable, many ISFDB scripts are no longer executable from the command line. The Session class pulls the arguments from the environment variable QUERY_STRING, which is not set when executing from the command line. When command line execution is not possible, then the new script must be installed, and if a simple syntax error appears within the file, then the browser simply returns "Internal Server Error", with no clue as to where the issue is. These types of issues are easily observable when running from the command line. To re-enable command line execution, I added the following to the end of ParseParameters in the Session class:

# Allow for command line invocation

if (cgi_path == None) and (self.query_string == None):

num_args = len(sys.argv)

for i in range(1, num_args, 1):

self.parameters.append(sys.argv[i])

- Try/Except Usage. We have a tendency to use try/except to cover many possible potential issues within a large code block. Here's an actual example from se.py, which contains one error when trying to run under Python3:

try:

type = form['type'].value

# Save the double-quote-escaped version of the original search value

# to be re-displayed in the search box

search_value = form['arg'].value.replace('"','"')

# Replace asterisks with % to facilitate wild cards

arg = str.replace(normalizeInput(form['arg'].value), '*', '%')

# Double escape backslashes, which is required by the SQL syntax

arg = string.replace(arg, '\\', '\\\\')

user = User()

user.load()

if not user.keep_spaces_in_searches:

arg = str.strip(arg)

if not arg:

raise

except:

PrintHeader("ISFDB Search Error")

PrintNavbar('search', %'%', 0, 'se.cgi', )

print("No search value specified")

PrintTrailer('search', , 0)

sys.exit(0)

- When this runs, all we see is "ISFDB Search Error, No search value specified", with no clue as to which clause might have caused the error. The typical approach then is to copy all of the try code, copy it to a position above the try statement, and then reformat the lines. Rather than getting into a philosophical debate about the overusage of try/except, we can make these blocks more debuggable. For instance, if we change the except clause above to:

import traceback

except Exception as e:

e = traceback.format_exc()

PrintHeader("ISFDB Search Error")

PrintNavbar('search', , 0, 'se.cgi', )

print("No search value specified")

print('Error: ', e)

PrintTrailer('search', , 0)

sys.exit(0)

- We now see the following error message:

Error: Traceback (most recent call last): File "/var/www/cgi-bin/se.cgi", line 263, in arg = string.replace(arg, '\\', '\\\\') AttributeError: module 'string' has no attribute 'replace'

- Showing that we forgot to replace 'string' with 'str'.

- SQL Debugging. As documented elsewhere, there is an issue with mysql.connector in extracting DATE values from MySQL when the month or day is zero. We have an SQL syntax fix for that, but there are many hundreds of SQL statements in the ISFDB, so only a small percentage have been addressed so far. When debugging a script (adv_search_results.py comes to mind, since that is my current problem area), one simply sees the following error:

TypeError: must be str, not datetime.date

args = ('must be str, not datetime.date',)

with_traceback = <built-in method with_traceback of TypeError object>

- So we know where the final problem occurred, but not which SQL method needs to be altered. Sometimes it is trivial to find, but on other occasions it requires looking through library.py, common.py, and some number of *Class.py files, which takes a fair amount of time. Additionally, I would like to make a set of SQL unit tests, but don't know what typical valid arguments look like.

- As such, I've added an SQL logging feature to the Session class, which can be enabled in SQLparsing.py by setting SQLlogging to 1. This records a running log in SESSION, and can output a bulleted list of the SQL function calls that were made by a particular script, outputting them in a new section added by PrintTrailer(). For scripts that are generating faults, a call to SQLoutputLog() can be temporarily inserted just above the fault point in the code. Relevant variables/methods:

- SQLlogging (SQLparsing.py) - Turns the feature on/off.

- SQLlog(arg) - Logs an SQL function call.

- SQLoutputLog() - Will output the log stored in the SESSION class. Each line is prepended with an HTML LI to print a bullet list in the browser.

General Unicode Debugging

I don't expect others to be debugging unicode issues, as they only arise during big projects, like updating the LAMP stack, moving to Python3, or moving everything to UTF-8, so these notes are mostly to remind myself. The following components all have something to say about character sets within the ISFDB stack:

- Browser - Displays what the document charset statement tells it to

- Apache - Currently set to latin-1 via AddDefaultCharset ISO-8859-1 in httpd.conf

- ISFDB - Currently set to latin-1 via an charset=iso-8859-1 statement in the first meta tag. The is set in localdefs.py as UNICODE.

- mysql.connector - Currently set to 'latin1', via db.set_charset_collation('latin1')

- MySQL - Currently set to 'latin1'

Nonetheless, what we're currently seeing on ISFDB2 while running on Python3 look suspiciously like UTF-8 decoded as Latin-1 (to experiment with strange output characters associated with encode/decode mismatches, try this tool). To verify this I'll use René Quillivic as an example. The LATIN-1 construction of the author's name is:

52 65 6e e9 20 51 75 69 6c 6c 69 76 69 63

Where the individual characters are encoded thus:

52 = R 65 = e 6e = n E9 = é 20 = Space 51 = Q 75 = u 69 = i 6c = l 6c = l 69 = i 76 = v 69 = i 63 = c

The UTF-8 construction of the author's name is:

52 65 6e c3 a9 20 51 75 69 6c 6c 69 76 69 63

Where the individual characters are encoded thus:

52 = R 65 = e 6e = n C3 A9 = é 20 = Space 51 = Q 75 = u 69 = i 6c = l 6c = l 69 = i 76 = v 69 = i 63 = c

Debugging Unicode issues can be difficult, as the browser will decode (based on the charset statement in the document) whatever Python is doing, so you can't trust the output. The best way to debug is from the command line, so that Apache and the Browser are removed from the problem. I have added the following code to common/library.py

def debugUnicodeString(input, filename):

f = open(filename, "w")

f.write(input)

f.close()

Which will push the target string into a file. This is invoked as follows:

debugUnicodeString(self.au_data[AUTHOR_CANONICAL], "AUTHOR_UNICODE")

After running from the command line, the file AUTHOR_UNICODE will appear. You also can't trust what you see in vi/vim, as that can be altered by its own character set command (set fileencodings=utf-8). Instead the actual bytes can be viewed on linux with: od -X AUTHOR_UNICODE. It will produce the following output:

[alvonruff@stage biblio]$ od -X AUTHOR_UNICODE 0000000 c36e6552 755120a9 696c6c69 00636976 0000017

The byte order within each word is reversed due to the little-endian architecture of the underlying machine. If the endianess is disturbing, you can issue od -X --endian=big AUTHOR_UNICODE, which generates:

[alvonruff@stage biblio]$ od -X --endian=big AUTHOR_UNICODE 0000000 52656ec3 a9205175 696c6c69 76696300 0000017

It is now easy to tell that this string is encoded with UTF-8. UTF-8 happens to be the default string encoding with Python3, so either the mysql.connector charset specification is being done incorrectly, or something more insidious happens when python3 picks up the string.

This can be isolated by writing a unittest in my new formal test suite:

class MyTestCase(unittest.TestCase):

def test_002_SQLloadAuthorData(self):

print("TEST: SQLloadAuthorData")

author = SQLloadAuthorData('185901')

printAuthorRecord(author)

where printAuthorRecord() also calls debugUnicodeString(). Under Python3 this generates:

od -x --endian=big AUTHOR_PYTHON3 0000000 5265 6ec3 a920 5175 696c 6c69 7669 6300

Whereas under Python2 this generates:

od -x --endian=big AUTHOR_PYTHON2 0000000 5265 6ee9 2051 7569 6c6c 6976 6963

Now, if I turn off unicode in the connector, like this:

db = mysql.connector.connect(user=USERNAME, password=PASSWORD, host=DBASEHOST, database=DBASE, charset='latin1', use_unicode=False)

a lot of stuff obviously fails, but I do see the following:

TEST: SQLloadAuthorData ID = 185901 CANONICAL = bytearray(b'Ren\xe9 Quillivic')

Whereas, if I change the followup charset configuration from:

db.set_charset_collation('latin1', 'latin1_swedish_ci')

to:

db.set_charset_collation('utf8mb4')

We see:

TEST: SQLloadAuthorData ID = 185901 CANONICAL = bytearray(b'Ren\xc3\xa9 Quillivic')

So all of the config parameters on the connector seem to be doing the right thing. The only thing left is whether print() itself is using some kind of encoding. print() send output on the stdout file descriptor, so if we check stdout:

Python 3.6.8 (default, Nov 17 2021, 16:10:06) [GCC 8.5.0 20210514 (Red Hat 8.5.0-3)] on linux Type "help", "copyright", "credits" or "license" for more information. >>> import sys >>> print(sys.stdout) <_io.TextIOWrapper name='<stdout>' mode='w' encoding='UTF-8'>

Well, hello there.

>>> import os

>>> print([(k, os.environ[k]) for k in os.environ if k.startswith('LANG')])

[('LANG', 'en_US.UTF-8')]

>>> import locale

>>> print(locale.getlocale())

('en_US', 'UTF-8')

From the shell:

$ env | grep LANG LANG=en_US.UTF-8

If we set LANG as follows:

export LANG=en_US.ISO8859-1

Then the test case now passes. So I put the following in /etc/httpd/conf/httpd.conf:

SetEnv LANG en_US.ISO8859-1

And things look pretty good now. The sad part is that since all I/O to stdout was affected, the bytes stored in the file system WERE ALSO A LIE. Remember to put the following in your .bashrc for future command line use:

export LANG=en_US.ISO8859-1

XML Unicode Debugging

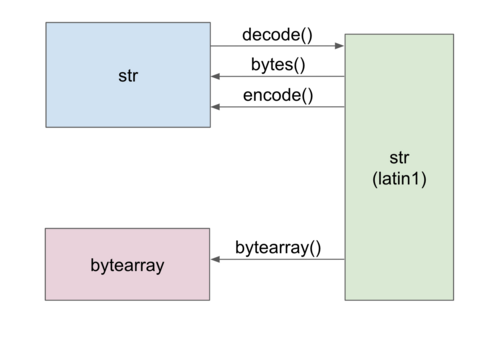

So in order to understand the XML issues on Python3, we need to cover the situation with unicode codecs on Python3 vs Python2. The general concept of codecs separates containers from encoders. For instance, in the audio domain, an audio file can be encoded with the Vorbis codec, but it is held within a WEBM container. For python unicode strings, the encoder/decoder are language targets like "latin1" or "utf-8", while the container is a built in type, like "str". In Python2, the container/type space was very simple:

If you begin with an object of type str, and perform an encode() on it, it generates another str. If you perform a decode() on that str, it generates yet another str. The situation on Python3 is far more complex:

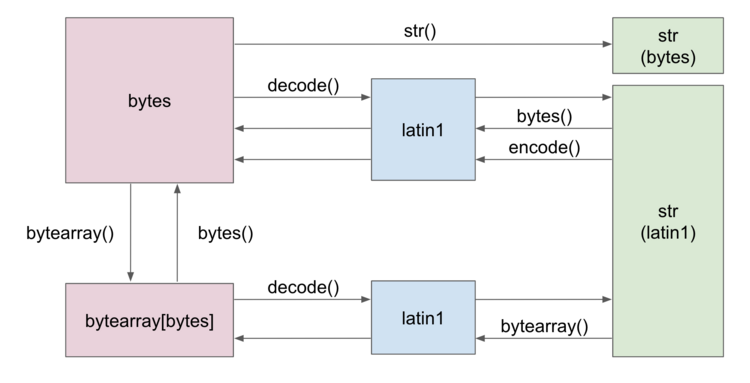

In Python3 there are 3 container/types: str, byte, and bytearray. If one starts with a str, you can only perform an encode() on that string - you cannot use decode() on the type str (The str object does not have the method decode). The encode() generates a new object of type bytes. Likewise, a decode() can only be performed on objects of type bytes or bytearray, and will always generate an object of type str. Objects of type bytes do not have the method encode.

Our code for GetElementValue() looks like this:

def GetElementValue(element, tag):

document = element[0].getElementsByTagName(tag)

try:

value = document[0].firstChild.data.encode('iso-8859-1')

except:

value =

return value

Under Python2 the encode() call transfers the data object into another string. So value has the type str. Under Python3, the encode() call causes an object of type bytes to be created, so value has the type bytes. So, to move the data back into a str type, we need to insert a decode():

def GetElementValue(element, tag):

document = element[0].getElementsByTagName(tag)

try:

value = document[0].firstChild.data.encode('iso-8859-1')

value = value.decode('iso-8859-1')

except:

value =

return value

There are probably 3 ways to fix this:

- Add a decode() statement as above, to change the type from bytes to str

- Add an str() case, which should also change the type from bytes to str.

- Remove the original encode call. This is probably not necessary under Python3, as every string always has an encoding, and this particular string probably picked up latin1 from MySQL.

The most portable between Python2 and Python3 would be the str method (a str(str) is a str). This fix is a prerequisite for moving on to the edit and mod scripts.